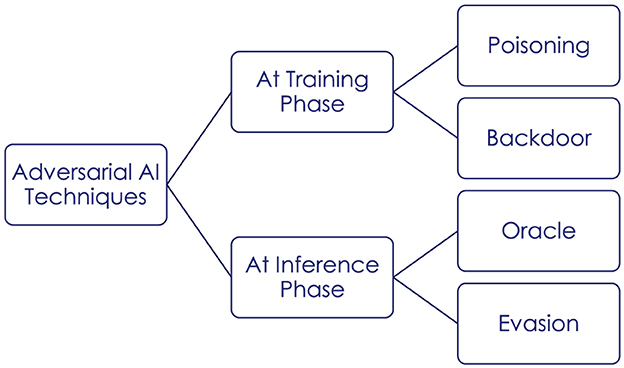

This paper addresses critical gaps in existing AI risk management frameworks, in particular the neglect of human factors and the absence of metrics for social and human-related threats. Drawing on insights from the NIST AI RMF and ENISA, it underscores the need to understand the limitations of human-AI interaction and to develop ethical and social measurements. The paper explores several dimensions of trustworthiness, covering legislation, AI cyber threat intelligence, and the characteristics of AI adversaries. It examines technical threats and vulnerabilities, including data access, poisoning, and backdoors, and highlights the importance of collaboration among cybersecurity engineers, AI experts, and professionals in social psychology, behavior, and ethics. It also examines the socio-psychological threats associated with integrating AI into society, including bias, misinformation, and privacy erosion. The paper proposes a comprehensive approach to AI trustworthiness that combines technical and social mitigation measures, standards, and ongoing research initiatives. It also introduces innovative defense strategies, such as cyber-social exercises, digital clones, and conversational agents, to improve understanding of adversary profiles and strengthen AI security. The paper concludes with a call for interdisciplinary collaboration, awareness campaigns, and continuous research to create a robust and resilient AI ecosystem aligned with ethical standards and societal expectations.