Building Trust in AI: The FAITH Framework


Artificial Intelligence (AI) is changing how we live and work – from helping doctors diagnose diseases to making transport safer and supporting public services. But as AI becomes part of critical decisions that affect people’s lives, the question of trust becomes essential: Can we rely on AI to act safely, fairly, and ethically? To answer this, the FAITH project is introducing the AI Trustworthiness Assessment Framework (AI_TAF) – a structured, human-centric approach for ensuring that AI systems remain reliable and responsible throughout their entire lifecycle.

A New Human-Centric Perspective

Most existing AI risk frameworks focus mainly on technical controls such as data quality, cybersecurity, or algorithm performance. What makes FAITH’s AI_TAF unique is its focus on people – the teams who design, develop, and use AI. The framework introduces a key innovation: the evaluation of AI Team Trustworthiness Maturity, which examines the readiness and ethical awareness of the people behind AI systems. This approach recognises that trust in AI depends not only on machines but on humans too. Even the most advanced system can fail if those operating it lack the skills, understanding, or support to oversee it responsibly. By integrating social, ethical, and psychological aspects with technical safeguards, AI_TAF helps organisations build AI that is both safe and human-aligned.

A Structured Path to Trusted AI

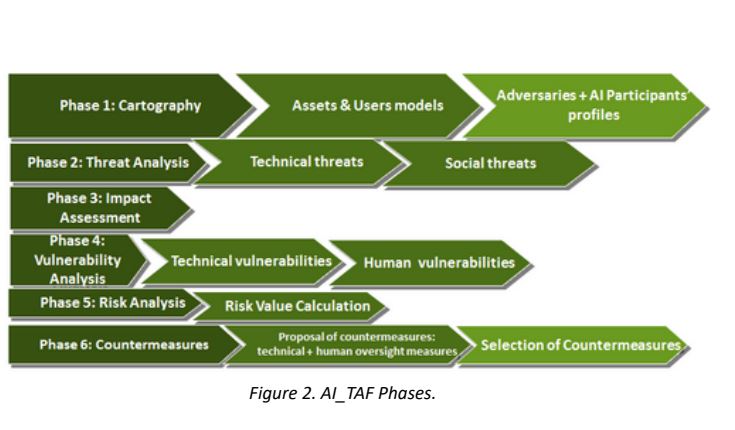

The AI_TAF follows a six-phase, iterative process inspired by international standards such as ISO 27005 and the NIST AI Risk Management Framework. These phases guide organisations from identifying potential AI threats to implementing measures that enhance safety, transparency, and fairness. At every step, the human factor is considered – ensuring that oversight, training, and ethical reflection evolve alongside technical development.

In practice, this means AI_TAF can be applied to any system, from a hospital’s diagnostic tool to an autonomous vehicle, offering a balanced view of both technical risks and human readiness. By combining these dimensions, organisations can make informed decisions, comply with regulations such as the EU AI Act, and, most importantly, strengthen public trust.

A Foundation for the Future

FAITH’s AI_TAF marks an important milestone towards trustworthy, responsible, and inclusive AI. It encourages collaboration between engineers, ethicists, and decision-makers to ensure that AI truly serves people and society. By focusing on both technology and the humans who shape it, FAITH sets the stage for a future where innovation and integrity go hand in hand.